Hugging Face hosts over 2.5 million large language models (LLMs) as of February 2026. However, identifying the subset relevant to a specific use case remains difficult, particularly in multilingual, industry-specific settings. At datAcxion, we encountered this challenge while developing an outbound voice-AI sales agent for a Turkish bank. We faced hurdles in voice activity detection (VAD), Turkish automatic speech recognition (ASR), user intent classification, next-best-action decisioning, and text-to-voice (TTV) streaming, all under a sub-second latency requirement. This write-up, however, focuses specifically on model selection.

Finding a proven LLM trained specifically for Turkish is challenging. Multilingual models exist, but variants augmented for Turkish banking often underperform. For instance, even models that use RAG or fine-tuning on Turkish banking documents, such as the Commencis LLM, did not meet our requirements.

We tested 36 Turkish-capable LLMs with product-specific prompt engineering in LM Studio on a Mac Studio M3 Ultra with 512 GB of unified memory. Our evaluation was based on three primary constraints:

- Latency: A bank-mandated SLA of at most 3 seconds per response (demo achieved 1.1s).

- Deployability: The model must run on-premise to comply with Turkish data-protection (GDPR-equivalent) requirements.

- Accuracy: A goal of handling 90% of cases without referral to a live agent.
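To check a response against the latency constraint, the total time has to be measured end to end on a streamed response. The following is a minimal sketch under stated assumptions: `chunks` stands in for any streaming client that yields text fragments (for example, one pointed at LM Studio's local OpenAI-compatible server, which is not shown here), and the function names and injectable `clock` parameter are hypothetical choices for illustration, not part of the original tooling. It records time to first token and total elapsed time, then compares the total with the 3-second SLA.

```python
import time
from typing import Callable, Iterable, Optional

SLA_SECONDS = 3.0  # bank-mandated ceiling per response


def measure_latency(
    chunks: Iterable[str],
    clock: Callable[[], float] = time.perf_counter,
) -> dict:
    """Time a streamed response.

    Returns the time to the first non-empty chunk (ttft_s), the total
    elapsed time until the stream is exhausted (total_s), and the text.
    """
    start = clock()
    first_token_at: Optional[float] = None
    parts = []
    for chunk in chunks:  # generation time elapses while we iterate
        if first_token_at is None and chunk:
            first_token_at = clock()
        parts.append(chunk)
    end = clock()
    return {
        "ttft_s": None if first_token_at is None else first_token_at - start,
        "total_s": end - start,
        "text": "".join(parts),
    }


def meets_sla(total_s: float, sla_s: float = SLA_SECONDS) -> bool:
    """True if the end-to-end response time is within the SLA."""
    return total_s <= sla_s
```

In use, `chunks` would ideally be a generator that opens the streaming request on its first iteration, so request setup counts toward the total. Injecting `clock` keeps the function deterministic in tests; otherwise the default `time.perf_counter` is used.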

To rank these models, we used the following scorecard:

$$\text{LLM Rank} = \text{Deployability} + \text{Efficiency/Scale} + 0.5 \times \text{Latency} + 3 \times \text{Accuracy/Quality}$$

Definitions:

- Deployability: Decile ranking (1-10) of model size in billions of parameters, inverted so that smaller models score higher.

- Efficiency/Scale: Decile ranking (1-10) of tokens per second per billion parameters (higher is better).

- Latency: Decile ranking (1-10) of total elapsed time, including thinking time, time to first token, and output time, inverted so that faster responses score higher.

- Accuracy/Quality (semantic quality and language accuracy): Decile ranking (1-10) based on output volume (the number of distinct rows), linguistic quality (e.g., no typos or non-Turkish words), and alignment with our internal taxonomy.
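The decile definitions above can be sketched as follows, assuming deciles are assigned by position within the pool of evaluated models. The exact binning and tie handling used in the evaluation are not stated, so the choices here are assumptions. `higher_is_better=False` implements the inverted scales for model size and elapsed time, and `efficiency` computes tokens per second per billion parameters.

```python
import bisect
import math
from typing import List, Sequence


def decile_ranks(values: Sequence[float], higher_is_better: bool = True) -> List[int]:
    """Assign each value a decile from 1 (worst) to 10 (best) within the pool.

    Set higher_is_better=False for inverted metrics such as model size
    or elapsed time, so that smaller values land in higher deciles.
    Tied values share the decile of their lowest position.
    """
    n = len(values)
    keyed = [v if higher_is_better else -v for v in values]
    ordered = sorted(keyed)
    ranks = []
    for k in keyed:
        position = bisect.bisect_left(ordered, k) + 1  # 1-based, ascending
        ranks.append(math.ceil(10 * position / n))
    return ranks


def efficiency(tokens_per_second: float, params_b: float) -> float:
    """Throughput normalized by model size: tokens/s per billion parameters."""
    return tokens_per_second / params_b


if __name__ == "__main__":
    # Sizes (B params) of the top five models in the results table.
    sizes = [9, 30, 4, 120, 27]
    print(decile_ranks(sizes, higher_is_better=False))  # [8, 4, 10, 2, 6]
```

Because deciles are relative to the pool, this five-model example yields different values from the table, which ranks the full set of evaluated models.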

In our weighting, deployability and efficiency each carry a weight of 1. The deployability weight can be adjusted to the target platform: lower for cloud operations, higher for more constrained environments. Fast processing is essential, because the model must assess user intent and generate its output tokens within the SLA. Even so, we halved the weight of the latency metric. Smaller, non-reasoning models are naturally faster but often produce lower-quality results, and we did not want to reward poor output simply because it is delivered quickly.

Linguistic and semantic quality was our most critical internal priority, which is why it carries a weight of 3. Inaccurate or incoherent responses degrade the user experience, increase referrals to live sales agents, and can ultimately damage brand perception. The results of our evaluation are detailed in the table below:

| Rank | Model Name | Architecture | Size (B params) | Deployability Decile | Efficiency Decile | Latency Decile | Quality Decile | Scorecard (D+E+0.5*L+3*Q) |
|---:|---|---|---:|---:|---:|---:|---:|---:|
| 1 | turkish-gemma-9b-t1 | Gemma-2 | 9 | 8 | 7 | 2 | 10 | 44 |
| 2 | qwen3-30b-a3b-thinking-2507-claude-4.5-sonnet-high-reasoning-distill | Qwen-3-MoE | 30 | 6 | 6 | 8 | 8 | 40 |
| 3 | gemma3-turkish-augment-ft | Gemma-3 | 4 | 10 | 10 | 9 | 5 | 39 |
| 4 | gpt-oss-120b-mlx | GPT-OSS | 120 | 1 | 5 | 6 | 10 | 39 |
| 5 | gemma-3-27b-it | Gemma-3 | 27 | 6 | 5 | 3 | 8 | 38 |
| 6 | qwen3-14b-claude-sonnet-4.5-reasoning-distill | Qwen-3 | 14 | 7 | 7 | 5 | 6 | 33 |
| 7 | minimax/minimax-m2 | Minimax-2-MoE-A10B | 230 | 1 | 3 | 4 | 9 | 33 |
| 8 | olmo-3-32b-think | Olmo-3 | 32 | 5 | 5 | 0 | 7 | 32 |
| 9 | turkish-llama-8b-instruct-v0.1 | Llama | 8 | 9 | 8 | 9 | 3 | 32 |
| 10 | glm-4.7 | GLM-4-MoE | 106 | 2 | 2 | 1 | 9 | 31 |
| 11 | qwq-32b | Qwen-2 | 32 | 5 | 3 | 0 | 7 | 31 |
| 12 | tongyi-deepresearch-30b-a3b-mlx | Qwen-3-MoE-A3B | 30 | 6 | 6 | 8 | 5 | 30 |
| 13 | intellect-3 | GLM-4-MoE | 106 | 2 | 4 | 5 | 7 | 29 |
| 14 | seed-oss-36b | Seed-OSS | 36 | 4 | 4 | 1 | 7 | 28 |
| 15 | teknofest-2025-turkish-edu-v2-i1 | Qwen-3 | 8 | 9 | 10 | 7 | 1 | 26 |
| 16 | baidu-ernie-4.5-21b-a3b | Ernie4-5-MoE | 21 | 7 | 8 | 10 | 2 | 25 |
| 17 | commencis-llm | Llama | 7 | 10 | 9 | 10 | 0 | 24 |
| 18 | cogito-v2-preview-llama-70b-mlx | Llama | 70 | 4 | 2 | 5 | 5 | 23 |
| 19 | turkish-article-abstracts-dataset-bb-mistral-model-v1-multi | Llama | 12 | 7 | 7 | 7 | 2 | 23 |
| 20 | mistral-7b-instruct-v0.2-turkish | Llama | 8 | 9 | 9 | 8 | 0 | 23 |
| 21 | deepseek-r1-0528-qwen3-8b | Qwen-3 | 8 | 9 | 8 | 3 | 1 | 21 |
| 22 | deepseek-v3-0324 | Deepseek-3 | 671 | 0 | 0 | 4 | 6 | 21 |
| 23 | hermes-4-70b | Llama | 70 | 4 | 2 | 6 | 3 | 19 |
| 24 | apertus-70b-instruct-2509-qx64-mlx | Apertus | 70 | 4 | 3 | 7 | 2 | 16 |
| 25 | c4ai-command-r-plus | Command-R | 104 | 2 | 1 | 2 | 4 | 16 |
| 26 | qwen3-235b-a22b | Qwen | 235 | 0 | 1 | 2 | 4 | 15 |
| 27 | grok-2 | Grok | 89 | 3 | 0 | 3 | 3 | 13 |

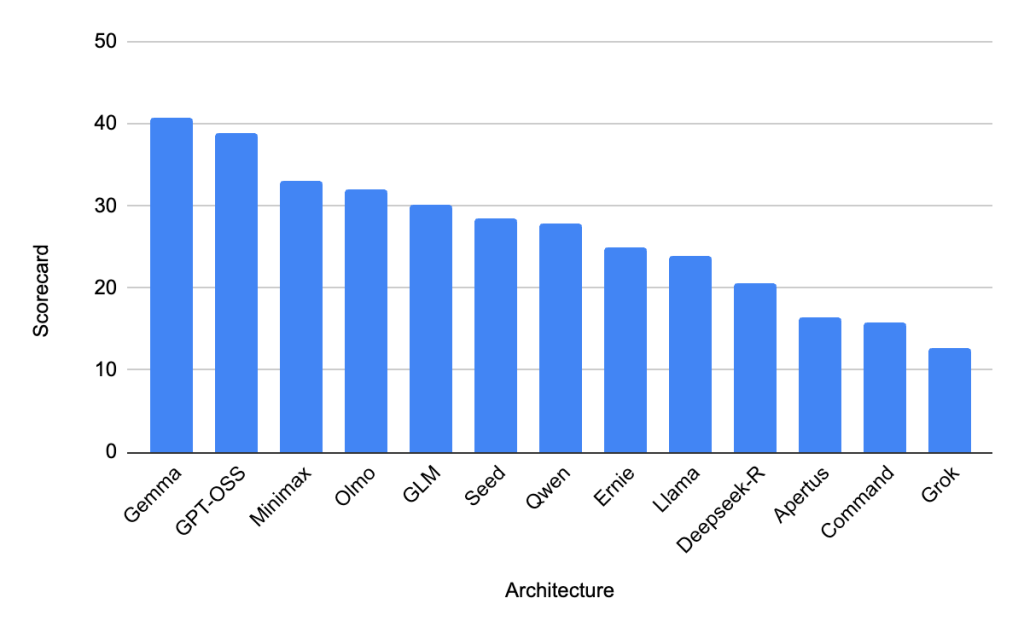
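The scorecard is a weighted sum of the four deciles. A minimal sketch follows, with the default weights from the scorecard formula and a `w_deploy` parameter for the platform-dependent adjustment described earlier; the `Deciles` dataclass and the parameter names are illustrative, not part of the original tooling.

```python
from dataclasses import dataclass


@dataclass
class Deciles:
    deployability: float
    efficiency: float
    latency: float
    quality: float


def scorecard(
    d: Deciles,
    w_deploy: float = 1.0,
    w_eff: float = 1.0,
    w_lat: float = 0.5,
    w_qual: float = 3.0,
) -> float:
    """Weighted sum D + E + 0.5*L + 3*Q with overridable weights."""
    return (
        w_deploy * d.deployability
        + w_eff * d.efficiency
        + w_lat * d.latency
        + w_qual * d.quality
    )


if __name__ == "__main__":
    qwen_distill = Deciles(6, 6, 8, 8)  # rank 2 in the table
    gpt_oss = Deciles(1, 5, 6, 10)      # rank 4 in the table
    print(scorecard(qwen_distill), scorecard(gpt_oss))  # 40.0 39.0
    # Hypothetical heavier deployability weight for a constrained platform:
    print(scorecard(qwen_distill, w_deploy=3.0), scorecard(gpt_oss, w_deploy=3.0))  # 52.0 41.0
```

Applied to the rounded deciles shown in the table, this reproduces some scores exactly (ranks 2, 4, 7, 17 and 25), while others differ by a point or two (rank 1 yields 46 against the published 44). One possible explanation, not stated in the source, is that the published scores were computed from unrounded values. Raising `w_deploy` to 3, a hypothetical value for a constrained environment, widens the gap between the 30B Qwen distill and the 120B GPT-OSS model from 1 point to 11.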

Before this evaluation we were using Gemma-3-27B-IT. Following the evaluation, we switched to Qwen3-30B-A3B-Thinking-2507-Claude-4.5-Sonnet-High-Reasoning-Distill. For offline demos on a laptop or in hardware-constrained environments, we recommend Turkish-Gemma-9B-T1. At the model-family level, Gemma ranked highest for this application, followed by GPT-OSS. Although the Qwen and Llama families are widely used in Turkish-language applications, they showed only average performance.

Note:

- Nine of the 36 models were excluded from the table because they either produced no output or produced inconsistent output across consecutive runs.
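The elimination rule in the note can be approximated with a repeated-run check. A minimal sketch under assumptions: `generate` stands in for any call that returns one model response, and "inconsistent" is interpreted here, hypothetically, as a change in the number of distinct rows across runs (the output-volume measure used in the quality metric). The actual criterion, number of runs, and tolerance used in the evaluation are not specified.

```python
from typing import Callable, List


def distinct_rows(text: str) -> int:
    """Count distinct non-empty lines in a response (whitespace-trimmed)."""
    return len({line.strip() for line in text.splitlines() if line.strip()})


def passes_consistency(
    generate: Callable[[], str],
    runs: int = 3,
    max_spread: int = 0,
) -> bool:
    """Return False if any run is empty or if the distinct-row count
    varies across runs by more than max_spread (hypothetical criterion)."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    counts: List[int] = [distinct_rows(generate()) for _ in range(runs)]
    if min(counts) == 0:
        return False  # no usable output on at least one run
    return max(counts) - min(counts) <= max_spread
```

A `max_spread` of 0 is strict; with sampling temperature above zero, a small tolerance may be more realistic.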
